Our Team Ranked 3rd* on the Global VLN Leaderboard

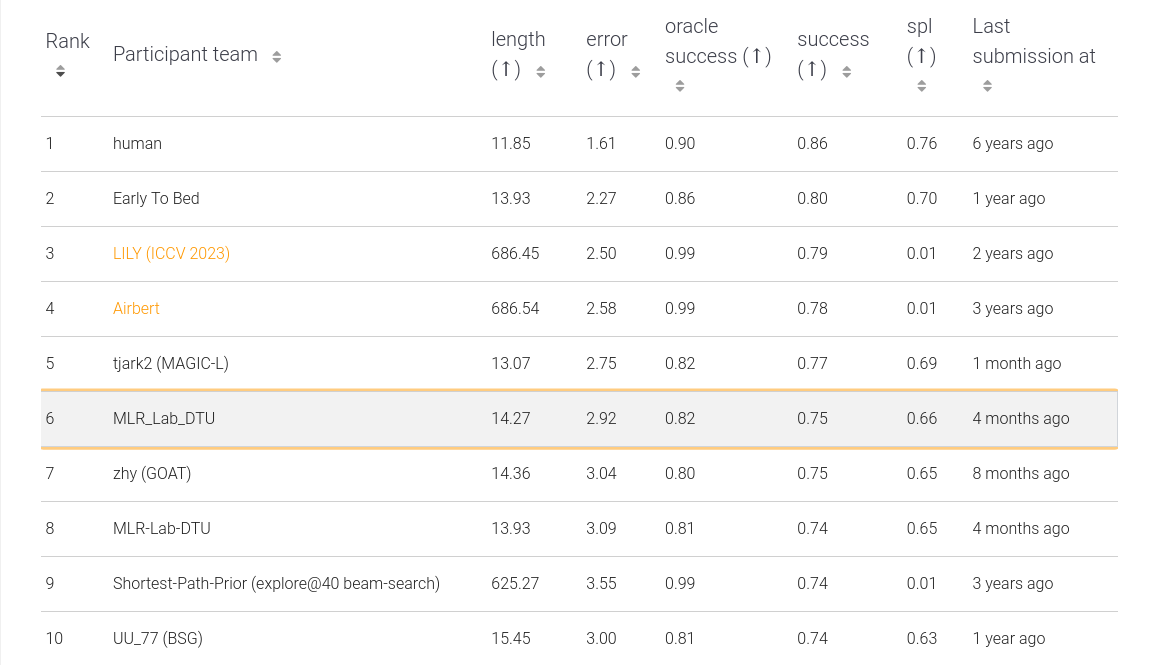

I am proud to announce that my work in Vision-Language Navigation (VLN) ranks 3rd* on the global VLN leaderboard.

* - Our team at the Machine Learning Research Laboratory (MLR-Lab) at Delhi Technological University (DTU), which works at the forefront of AI research, recently reached another milestone. We placed 6th overall on the leaderboard, which ranks entries on a variety of metrics [1]. However, some leaderboard entries are not comparable with what we are trying to achieve: the Human benchmark, which records human performance on the same task; pre-exploration methods such as AirBert and LILY, which explore the environment in advance to build a map; and others. We therefore excluded them and counted only the entries that followed the same task rules as we did. Counted this way, we rank 3rd overall on the global VLN leaderboard.

Vision-Language Navigation is a rapidly growing area of multimodal learning that draws on several fields, including Computer Vision, Natural Language Processing, and Reinforcement Learning. It requires an autonomous embodied agent to follow natural-language instructions, using the visual features available in its surroundings, to navigate successfully to its target in a previously unseen environment.

[1] Anderson, Peter, Qi Wu, Damien Teney, Jake Bruce, Mark Johnson, Niko Sünderhauf, Ian Reid, Stephen Gould, and Anton Van Den Hengel. “Vision-and-language navigation: Interpreting visually-grounded navigation instructions in real environments.” In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 3674-3683. 2018.